“Why I Look at Data Differently” by Emily Oster

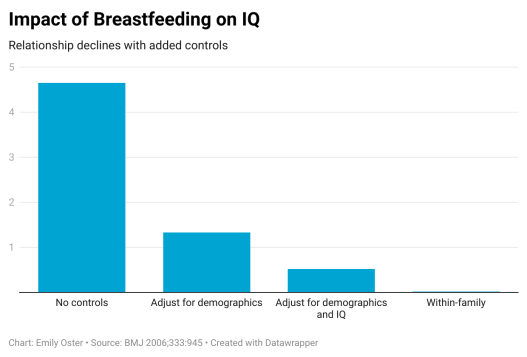

A really thorough and easy-to-read discussion of the nature of data collection, the different types of studies, and the conclusions we can draw from them. Really useful for discussions in the Human or Natural Sciences. She covers the concept of data broadly and supports it with many different examples, including breastfeeding and IQ.

The question of whether a controlled effect in observational data is “causal” is inherently unanswerable. We are worried about differences between people that we cannot observe in the data. We can’t see them, so we must speculate about whether they are there. Based on a couple of decades of working intensely on these questions in both my research and my popular writing, I think they are almost always there. I think they are almost always important, and that a huge share of the correlations we see in observational data are not close to causal.
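For classroom use, the point about unobserved differences can be illustrated with a small simulation. This is only a sketch with made-up numbers, not anything taken from the article: the variable names (`u` for an unobserved trait, `x` for an imperfect observed measure of it, `t` for the "treatment", `y` for the outcome) and all effect sizes are assumptions chosen for illustration. The true effect of `t` on `y` is set to zero, so any estimated effect is entirely due to confounding.

```python
import random
from statistics import fmean


def slope(x, y):
    """Least-squares slope from regressing y on x (with an intercept)."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residuals(x, y):
    """What is left of y after removing its linear relationship with x."""
    b = slope(x, y)
    mx, my = fmean(x), fmean(y)
    return [(yi - my) - b * (xi - mx) for xi, yi in zip(x, y)]


def simulate(n=20000, seed=0):
    rng = random.Random(seed)
    # u: a trait we cannot see in the data (hypothetical).
    u = [rng.gauss(0, 1) for _ in range(n)]
    # x: the control we CAN see, only a noisy measure of u.
    x = [ui + rng.gauss(0, 1) for ui in u]
    # t: people with higher u are more likely to be "treated".
    t = [1.0 if ui + rng.gauss(0, 1) > 0 else 0.0 for ui in u]
    # y: the outcome depends on u only; the true effect of t is zero.
    y = [2.0 * ui + rng.gauss(0, 1) for ui in u]

    # Naive comparison: treated mean minus untreated mean.
    treated = [yi for yi, ti in zip(y, t) if ti == 1.0]
    untreated = [yi for yi, ti in zip(y, t) if ti == 0.0]
    naive = fmean(treated) - fmean(untreated)

    # "Controlled" comparison: effect of t on y after adjusting both for x
    # (equivalent to the coefficient on t in a regression of y on t and x).
    adjusted = slope(residuals(x, t), residuals(x, y))
    return naive, adjusted


if __name__ == "__main__":
    naive, adjusted = simulate()
    print(f"naive difference:    {naive:.2f}")
    print(f"controlled estimate: {adjusted:.2f}")
    print("true causal effect:  0.00")
```

Under these assumptions, controlling for `x` shrinks the estimate but leaves it well away from zero, because `x` captures only part of `u`. Students can try making `x` a better or worse measure of `u` (by changing its noise) and see how the controlled estimate moves.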